Ultimate Guide to Visual Testing with Playwright

As your web app matures, it becomes challenging to ensure your GUI doesn’t break with any given update. There are a lot of browsers and devices, and countless states for every one of your components. Unit tests ensure your code remains consistent, and E2E tests will ensure your system remains consistent, but neither will catch visual anomalies, layout issues, or platform compatibility issues.

Enter visual testing. Visual tests store snapshots of your app’s appearance and compare them to the current state of your app. No more surprise bugs. No more developing in three different browsers and 12 different breakpoints. Visual testing automates the process, ensuring your app looks exactly right, all the time.

This article will help you grow from newbie to master visual tester. Even experts will find some benefits in the later sections, as we dive into unique strategies for testing, local development, and CI/CD pipelines.

This article will rely on Playwright, a truly awesome, open-source tool for integration tests with support for all major browser engines (Chromium, Firefox, and WebKit) and many programming languages. It’s great for visual testing. If you’re not already using Playwright, you should be.

Visual Testing F.A.Q.

Let’s start by exploring some common questions about visual testing. Just want the code? Jump to Getting Started with Visual Testing.

What is visual testing?

Visual testing is the practice of automatically verifying an app’s visual integrity by comparing its current state to baseline images. It ensures that changes to the code don’t introduce unexpected results in the UI. It can also be used to ensure consistency across different browsers and devices, as well as to ensure your app remains accessible and beautiful.

Visual testing is sometimes referred to as visual regression testing, automated visual testing, and snapshot testing.

What are the benefits of visual testing?

Visual testing ensures your app remains functional and attractive, even during rapid development. Visual tests catch bugs that other testing methods completely miss. And because the process is automated, visual testing saves an incredible amount of time and effort in backtracking and bug-fixing.

What are the best practices for visual testing?

Visual testing is a simple process. You simply store snapshots of your app and components in various states, then compare those snapshots to the application after code changes. So long as you run your tests regularly and use a good strategy for updating snapshots, you’ll have no problem reaping the rewards of visual testing.

What is the difference between visual testing and unit testing?

While unit tests focus on the inputs and outputs of specific code interfaces, visual tests focus on the user interface itself. Unit tests are great for verifying core behavior and edge cases, but only visual tests ensure users can trigger those functions and see the results.

What is the difference between visual testing and E2E testing?

E2E tests ensure that your application’s views and components behave as expected, but visual tests ensure those views and components appear as expected.

E2E tests target elements using DOM selectors, while humans use their eyes. Thus, while E2E tests can confirm that a button is clickable, only visual tests can confirm that the button is visible and in the right place.

When should I use visual testing?

Visual tests grow increasingly valuable as an application evolves. Early in development, your UI is in constant flux, so visual testing doesn’t offer great value. But as soon as your app begins to stabilize, visual testing becomes a game-changer.

I recommend adding visual tests incrementally to views or components you haven’t changed in a few months, but that you need to change today. Before you begin coding, add visual tests for components that should not change as a result of your current work. Then code with confidence!

Is visual testing worth it?

Yes. As your app evolves, your visual tests become essential to productivity. For example, you might set up a test suite that simply compares the same view across different browsers and screen sizes. With these tests in place, you can confidently make changes to your app without having three browsers open at all times.

What is the best tool for visual testing?

Playwright is widely regarded as the best test runner for browser automation and integration testing. Visual tests are no exception. With robust configuration options, support for all browsers and many programming languages, an intuitive API, and a huge community, there’s no reason to look anywhere else.

Getting Started with Visual Testing

Let’s start by setting up your repo, then we’ll write some tests.

This section should take under 10 minutes to get going. Before reading further, I highly recommend getting set up, as later in the guide, you’ll be able to experiment with advanced practices hands-on.

1. Install dependencies

If you already have Playwright installed, skip this step.

Otherwise, install your dependencies and create a sample test file:

# Install node dependencies
npm install -D @playwright/test
npm install -D typescript

# Install playwright browsers
npx playwright install

# Create the test file
mkdir -p tests
touch tests/homepage.spec.ts

2. Write your first visual test

Open tests/homepage.spec.ts and add the following code:

import {test, expect} from '@playwright/test';

test('home page visual test', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  await expect(page).toHaveScreenshot();
});

3. Run your visual tests

Run your tests with the following command:

npx playwright test

The first time you run your tests, they will fail with an error message like this:

Error: A snapshot doesn't exist at {TEST_OUTPUT_PATH}, writing actual.

This is expected. Since Playwright has nothing to compare expect(page).toHaveScreenshot() to, it must fail the test. But don’t worry: the next time you run your tests, Playwright will compare the current state of the page to the stored snapshot.

So run your tests a second time:

npx playwright test

Success! You should see a message like this:

Running 1 test using 1 worker

1 passed (6.6s)

If your test failed, take a few minutes to see if you can fix the issue. If not, continue reading. I cover numerous troubleshooting tips later in this article.

Where are snapshots stored?

By default, test snapshots are stored alongside the test file that created them.

When a visual test fails, Playwright will store the “before” image, the “after” image, and a “diff” image in the ./test-results directory. These will come in handy later, for debugging your failed tests.

(Keep reading for advice on configuring the location of these snapshots. You probably don’t want test output littering your codebase.)

4. Updating test snapshots

Awesome! Your test now confirms there haven’t been any changes to the page, but what if you actually want to make some changes?

To update your snapshots, run your tests with the --update-snapshots (or -u) flag:

npx playwright test -u

This command will run your tests and update the snapshots to match the current state of the page. If you run this now, you should expect a passing result.

A word of caution: This command updates all snapshots. Be very careful, as it’s easy to accidentally update snapshots you didn’t intend to.

How do I update only some snapshots?

To update the snapshots for a subset of tests, we can filter tests from the CLI. Only matching tests will be updated.

# Update tests with matching file path
# (file filters are treated as regular expressions, not globs)
npx playwright test -u "home.*\.spec\.ts"

# Update tests with matching test name
npx playwright test -u --grep "home" --grep-invert "zzz"

# Update tests within a project
npx playwright test -u --project "chromium"

We’ll go deeper into snapshot management later, but for now, let’s focus on debugging…

5. Debug visual tests

We know how to update failing tests’ snapshots, but what about when a test is failing because of a bug?

To explore this scenario, let’s update our test so that our snapshot fails:

test('home page visual test', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  await expect(page).toHaveScreenshot({
    // crop the screenshot to a specific area
    clip: {x: 0, y: 0, width: 500, height: 500},
  });
});

Now let’s run the tests, asking playwright to produce an HTML report:

npx playwright test --reporter html

After that command, your browser should have opened to your test report. And it should show a failed result.

Scroll down to the bottom of the page, and you’ll see this widget:

The “Diff” view offers a stark comparison between the expected and actual screenshots. However, I find the “Slider” view to be the most useful for actually fixing issues.

How do I interpret the “diff” view?

The “diff” view shows the difference between the expected and actual screenshots.

Yellow pixels vary between snapshots, but they fall within the allowed threshold for differences.

Red pixels fall outside the allowed threshold for differences. By default, even a single red pixel will fail your test.

For advice on configuring these thresholds, jump to Make Visual Tests More Forgiving.

Do I need to use the HTML report?

No, you don’t have to use the HTML report to run visual tests (though I recommend it). Whether or not you generate an HTML report, failing visual tests will automatically output the “before,” “after,” and “diff” images to the ./test-results directory.

Often, I’ll open the “before” image directly in VSCode while I work. That way I can keep my browser focused on my development app.

6. Use UI mode for quick visual testing

Playwright has an industry-leading “UI Mode” that makes working with tests a breeze.

While it has numerous killer features, my favorite is that it automatically snapshots the test after every single step. This allows you to quickly debug your tests. It also makes it very easy to spot the right places to insert a visual assertion.

To run your tests in UI mode, use the following command:

npx playwright test --ui

I encourage you to explore UI mode, as it has a wealth of useful features.

OK! Now that you’ve got a feel for working with visual tests, let’s take you from white belt to black belt. In the process, we’ll leverage some awesome Playwright features, write durable tests, fine-tune our config, and more.

Page vs. Element Snapshots

Playwright’s visual testing API allows you to take snapshots of the entire page or just a specific element.

But when is the right time to use one over the other?

When should I use page snapshots?

Page snapshots are excellent for verifying the entire page works as expected. Use page snapshots to test layout, responsiveness, and accessibility.

But be warned: Page snapshots can be flaky. After all, if anything within the viewport changes, the entire snapshot will fail. We’ll cover strategies for minimizing these effects later, but for now, it’s wise to consider page snapshots a powerful, blunt instrument.

You’ve already seen a page snapshot in action. Here’s a refresher:

test('page snapshot', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  await expect(page).toHaveScreenshot();
});

When should I use element snapshots?

Element snapshots, as you expect, focus exclusively on a single page element. This makes them an excellent choice for testing components in isolation, or for verifying an element behaves as expected within a certain context.

Element snapshots are substantially less brittle than page snapshots, but they require a bit more overhead to set up. After all, their narrow targeting means you need more of them to cover the same surface area as a page snapshot.

Here’s an example of an element snapshot:

test('element snapshot', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  const $button = page.locator('button').first();
  await expect($button).toHaveScreenshot();
});

Working with Page Snapshots

Let’s explore some useful features and common use-cases for page snapshots…

Cropping Page Snapshots

Sometimes the entire viewport isn’t necessary to prove your test passes. And sometimes a portion of the viewport changes frequently by design, turning an otherwise great test into a flake.

In these cases, it’s best to crop your snapshot to the area of interest. Here’s an example:

test('cropped snapshot', async ({page}) => {
  await page.goto('https://www.browsercat.com');

  // viewportSize() can return null, so assert that a viewport is set
  const {width, height} = page.viewportSize()!;

  await expect(page).toHaveScreenshot({
    // square at the center of the viewport
    clip: {
      x: (width - 400) / 2,
      y: (height - 400) / 2,
      width: 400,
      height: 400,
    },
  });

  await expect(page).toHaveScreenshot({
    // top slice, full viewport width
    clip: {x: 0, y: 0, width, height: 16},
  });
});
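
The clip arithmetic above is easy to get wrong once you start varying sizes, so it can help to pull it into a small helper. The sketch below is only an illustration: centeredClip and the Size and Rect types are hypothetical names, not part of Playwright, and the clamping to the viewport is my own assumption about what you would want.

```typescript
// Hypothetical helper types; the returned object has the shape of the `clip` option.
type Size = {width: number; height: number};
type Rect = {x: number; y: number; width: number; height: number};

// Returns a rectangle of (at most) the requested size, centered in the viewport.
function centeredClip(viewport: Size, wanted: Size): Rect {
  // Never ask for more pixels than the viewport actually has.
  const width = Math.min(wanted.width, viewport.width);
  const height = Math.min(wanted.height, viewport.height);
  return {
    x: Math.floor((viewport.width - width) / 2),
    y: Math.floor((viewport.height - height) / 2),
    width,
    height,
  };
}

const clip = centeredClip({width: 1280, height: 720}, {width: 400, height: 400});
console.log(clip); // { x: 440, y: 160, width: 400, height: 400 }
```

In a test, the result could be passed straight to the assertion, for example as the clip option of expect(page).toHaveScreenshot().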

Snapshot the Entire Page

By default, Playwright takes a snapshot of the current viewport. This is typically what you want, as the larger the snapshot is, the more likely it is for your test to fail.

However, full page snapshots have their place. For example, if you’re testing that a page looks the same across different browsers, the easiest solution is to snapshot the entire page. And since, for this kind of test, you aren’t storing the snapshot from previous runs, you’re not going to end up with an overly flaky test.

Here’s how you take a full page snapshot:

test('full page snapshot', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  await expect(page).toHaveScreenshot({
    fullPage: true,
  });
});

Scroll Before Taking a Page Snapshot

When working with page snapshots, you’ll often want to scroll the page before the visual assertion.

Here’s how:

test('scroll before snapshot', async ({page}) => {
  await page.goto('https://www.browsercat.com');

  await page.evaluate(() => {
    document
      .querySelector('#your-element')
      ?.scrollIntoView({behavior: 'instant'});
  });

  await expect(page).toHaveScreenshot();
});

Note: While Playwright has a .scrollIntoViewIfNeeded() method, it will not scroll the element to the top of the viewport. So I recommend the solution above. It will make full use of your viewport and ensure your snapshot is consistent between runs.

Working with Element Snapshots

Element snapshots are much more “in the weeds” than page snapshots. They bring a lot of power and flexibility.

Let’s explore some examples…

Test Element Interactivity

As your component library grows, it becomes harder and harder to keep track of every state of every element in your library.

In the following example, we snapshot a form input across various states:

test('element states', async ({page}) => {
  await page.goto('https://www.browsercat.com/contact');
  const $textarea = page.locator('textarea').first();

  await expect($textarea).toHaveScreenshot();

  await $textarea.hover();
  await expect($textarea).toHaveScreenshot();

  await $textarea.focus();
  await expect($textarea).toHaveScreenshot();

  await $textarea.fill('Hey, cool cat!');
  await expect($textarea).toHaveScreenshot();
});

Test Element Responsiveness

When working with responsive designs, it’s important to ensure your elements look good across the full range of screen sizes.

Use element snapshots to ensure your components look good at various breakpoints.

test('element responsiveness', async ({page}) => {
  const viewportWidths = [960, 760, 480];

  await page.goto('https://www.browsercat.com/blog');
  const $post = page.locator('main article').first();

  for (const width of viewportWidths) {
    await page.setViewportSize({width, height: 800});
    await expect($post).toHaveScreenshot(`post-${width}.png`);
  }
});

Advanced Snapshot Techniques

Page and element snapshots share many common configuration options. Let’s explore the most useful among them…

Masking Portions of a Snapshot

Sometimes, you’ll want to exclude certain portions of a snapshot. A sub-element or sub-region may change frequently, contain sensitive information, or be irrelevant to the test. For example, a timestamp, an animation, a user email address, or a rotating ad.

Playwright provides the ability to “mask” these areas, replacing them with a consistent bright color unlikely to be confused for the content of your site.

Here’s an example:

test('masked snapshots', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  const $hero = page.locator('main > header');
  const $footer = page.locator('body > footer');

  await expect(page).toHaveScreenshot({
    mask: [
      $hero.locator('img[src$=".svg"]'),
      $hero.locator('a[target="_blank"]'),
    ],
  });

  await expect($footer).toHaveScreenshot({
    mask: [
      $footer.locator('svg'),
    ],
  });
});

And here’s what the first masked snapshot looks like:

Keeping Styles Constant During Snapshots

Visual tests are valuable because they catch unexpected changes to your app’s appearance. Some page elements are too unreliable to include as-is.

Thankfully, we can include some basic CSS for the duration of a snapshot that restrains or hides troublesome elements from the page.

Here’s how:

test('consistent styles', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  const $hero = page.locator('main > header');

  await expect(page).toHaveScreenshot({
    stylePath: [
      './hide-dynamic-elements.css',
      './disable-scroll-animations.css',
    ],
  });

  await expect($hero).toHaveScreenshot({
    stylePath: [
      './hide-dynamic-elements.css',
      './disable-scroll-animations.css',
    ],
  });
});
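
If most of your snapshots need the same stylesheets, repeating stylePath in every assertion gets noisy. One option is to set it once in playwright.config.ts. This is a minimal sketch, assuming your Playwright version accepts stylePath under expect.toHaveScreenshot in the config, and reusing the hypothetical CSS file names from the test above:

```typescript
import {defineConfig} from '@playwright/test';

export default defineConfig({
  expect: {
    toHaveScreenshot: {
      // Applied to every toHaveScreenshot() assertion unless a test overrides it.
      // These are the hypothetical file names from the example above.
      stylePath: [
        './hide-dynamic-elements.css',
        './disable-scroll-animations.css',
      ],
    },
  },
});
```

Individual assertions can still pass their own stylePath when a single test needs something different.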

Auto-Retry Flaky Snapshots

When working with animations or dynamic content, your visual tests can become flaky. Large page snapshots are particularly susceptible.

Playwright can automatically retry failed visual tests for a certain duration, until it finds a valid match. Enable the feature like so:

test('retry snapshots', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  const $hero = page.locator('main > header');

  await expect(page).toHaveScreenshot({
    // retry snapshot until timeout is reached
    timeout: 1000 * 60,
  });

  await expect($hero).toHaveScreenshot({
    // retry snapshot until timeout is reached
    timeout: 1000 * 60,
  });
});

Visual Tests for Generated Images

99.9% of the time, page and element snapshots will cover your use-case. But there are times when you’ll want to assert an arbitrary image is consistent across test runs.

For example, perhaps your application generates QR codes or social share cards. Or perhaps you compress and transform user-uploaded avatars. You’ll want to ensure this functionality doesn’t break.

Use expect().toMatchSnapshot() for this:

import {test, expect} from '@playwright/test';
import {buffer} from 'stream/consumers';

test('arbitrary snapshot', async ({page}) => {
  // generates custom avatars (fun!)
  await page.goto('https://getavataaars.com');

  // start listening before the click, so the download event isn't missed
  const downloadPromise = page.waitForEvent('download');
  await page.locator('main form button').first().click();

  // download the avatar
  const avatar = await downloadPromise
    .then((dl) => dl.createReadStream())
    .then((stream) => buffer(stream));

  expect(avatar).toMatchSnapshot('avatar.png');
});

Compare Snapshots Across Browsers

All of the tests we’ve written thus far compare the state of your app before and after code changes. But what if you want to compare the state of your app across different browsers and devices?

To accomplish this, we’re going to lean on Playwright’s “projects” functionality. Projects allow you to define custom test suites with unique configuration. In a mature codebase, you may have quite a lot of these for different devices, environments, and testing strategies.

Let’s make some magic!

First, update your playwright.config.ts. If you don’t have one yet, create it at the root of your project:

import {defineConfig, devices} from '@playwright/test';

// shared by every cross-browser project
const crossBrowserConfig = {
  testDir: './tests/cross-browser',
  snapshotPathTemplate: '.test/cross/{testFilePath}/{arg}{ext}',
  expect: {
    toHaveScreenshot: {maxDiffPixelRatio: 0.1},
  },
};

export default defineConfig({
  // other config here...
  projects: [
    {
      name: 'cross-chromium',
      use: {...devices['Desktop Chrome']},
      ...crossBrowserConfig,
    },
    {
      name: 'cross-firefox',
      use: {...devices['Desktop Firefox']},
      dependencies: ['cross-chromium'],
      ...crossBrowserConfig,
    },
    {
      // runs last; its dependency chain pulls in the other two browsers
      name: 'cross-browser',
      use: {...devices['Desktop Safari']},
      dependencies: ['cross-firefox'],
      ...crossBrowserConfig,
    },
  ],
});

Notice how we’re specifically configuring the snapshotPathTemplate to store the snapshots for all browsers in the same location. This will ensure that each test compares its snapshots to the same source images.

Next, create a new test file at ./tests/cross-browser/homepage.spec.ts:

import {test, expect} from '@playwright/test';

test('cross-browser snapshots', async ({page}) => {
  await page.goto('https://www.browsercat.com');

  await page.locator(':has(> a figure)')
    .evaluate(($el) => $el.remove());

  await expect(page).toHaveScreenshot(`home-page.png`, {
    fullPage: true,
  });
});

To avoid a fail, let’s initialize our new snapshots:

npx playwright test --project cross-browser -u

And then let’s run the tests:

npx playwright test --project cross-browser

Did all of your tests pass? Depending on your environment, they may not have! Different browsers render fonts, colors, and images differently, even when your app is functioning as expected.

If your tests failed, you may need to tweak the maxDiffPixelRatio and threshold options for your snapshots. If you want to debug this issue right now, jump to Make Visual Tests More Forgiving.

Configuring Visual Tests

Because visual tests are so sensitive, it’s important to have a good handle on their configuration. Let’s explore some of the most useful options…

Custom Snapshot File Names

Playwright automatically names your snapshots based on the name of the test. If you’re only using snapshots for visual testing, that’s fine.

However, many users like to repurpose these images for documentation, style guides, and deployment reports. These tasks demand consistent file names.

Name your snapshots like so:

test('custom snapshot names', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  const $hero = page.locator('main > header');

  await expect(page).toHaveScreenshot('home-page.png');
  await expect($hero).toHaveScreenshot('home-hero.png');

  const $footer = page.locator('body > footer');
  const footImg = await $footer.screenshot();
  expect(footImg).toMatchSnapshot('home-foot.png');
});

Note: Custom snapshot names don’t completely control the file name unless you also configure custom directories. With the default configuration, Playwright adds a suffix to your file names to ensure they’re unique across projects.

Read on for how to customize the snapshot directory structure…

Custom Snapshot Directories

By default, Playwright stores snapshots in the same directory as the test file that created them. This method has numerous shortcomings:

- It makes navigating your codebase more difficult.

- It’s hard to exclude snapshots from version control.

- It’s not easy to cache results between CI/CD runs.

- And it gets in the way of custom snapshot file names.

So let’s tell Playwright to store our snapshots in a custom directory. Update your playwright.config.ts:

export default defineConfig({
  snapshotPathTemplate: '.test/snaps/{projectName}/{testFilePath}/{arg}{ext}',
});

With the above configuration, if our test file is stored at ./tests/homepage.spec.ts, Playwright will store our snapshots like so:

.test/
  snaps/
    tests/
      homepage.spec.ts/
        home-page.png
        home-hero.png
        home-foot.png

With this pattern, you can exclude your snapshots from version control, you can cache your results in CI/CD, and you can easily review failed tests as they arise.

Read more about your options in the Playwright docs for snapshot path templates.
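
To build intuition for how a template turns into a file path, here is a deliberately simplified model of the substitution. It is not Playwright's implementation: resolveSnapshotPath and SnapshotTokens are hypothetical names, the model only knows the four tokens used in this guide, and it assumes that an empty token (such as a missing project name) simply drops out of the path.

```typescript
// Hypothetical, simplified model of snapshot path templating.
type SnapshotTokens = {
  projectName: string;  // empty when no project is configured
  testFilePath: string; // test file path relative to the test directory
  arg: string;          // snapshot name without its extension
  ext: string;          // extension, including the leading dot
};

function resolveSnapshotPath(template: string, tokens: SnapshotTokens): string {
  const filled = template.replace(
    /\{(projectName|testFilePath|arg|ext)\}/g,
    (_match: string, name: string) => tokens[name as keyof SnapshotTokens],
  );
  // Collapse the empty segment left behind by a blank token.
  return filled.replace(/\/{2,}/g, '/');
}

const template = '.test/snaps/{projectName}/{testFilePath}/{arg}{ext}';

console.log(resolveSnapshotPath(template, {
  projectName: '',
  testFilePath: 'tests/homepage.spec.ts',
  arg: 'home-page',
  ext: '.png',
}));
// .test/snaps/tests/homepage.spec.ts/home-page.png

console.log(resolveSnapshotPath(template, {
  projectName: 'chromium',
  testFilePath: 'tests/homepage.spec.ts',
  arg: 'home-page',
  ext: '.png',
}));
// .test/snaps/chromium/tests/homepage.spec.ts/home-page.png
```

The second call shows why {projectName} matters: once you define projects, each one gets its own subdirectory, so browsers never overwrite each other's baselines.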

Make Visual Tests More Forgiving

Out of the box, visual tests are very strict. If a single pixel fails, your test fails. Thankfully, Playwright provides numerous controls for tuning how sensitive your visual tests should be.

Here are your options:

- threshold: How much a single pixel must vary for it to be considered different. Values are a percentage from 0 to 1, with 0.2 as the default.

- maxDiffPixels: The maximum number of pixels that can differ while still passing the test. By default, this option is disabled.

- maxDiffPixelRatio: The maximum percentage of pixels that can differ while still passing the test. Values are a percentage from 0 to 1, but this control is disabled by default.

You can tune these options globally or per-assertion.
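
To make the interplay between these options concrete, here is a simplified sketch of the pass/fail decision. It is not Playwright's source code. The function names are hypothetical, and it rests on two assumptions: when both limits are set, the stricter one wins, and when neither is set, no differing pixels are tolerated.

```typescript
// A simplified model of the pass/fail decision, not Playwright's implementation.
type DiffOptions = {
  maxDiffPixels?: number;     // absolute budget of differing pixels
  maxDiffPixelRatio?: number; // budget as a fraction (0 to 1) of all pixels
};

// Assumption: the stricter of the two limits applies; with neither set, the budget is zero.
function allowedDiffPixels(width: number, height: number, opts: DiffOptions): number {
  const byCount = opts.maxDiffPixels;
  const byRatio = opts.maxDiffPixelRatio === undefined
    ? undefined
    : width * height * opts.maxDiffPixelRatio;
  if (byCount !== undefined && byRatio !== undefined) {
    return Math.min(byCount, byRatio);
  }
  return byCount ?? byRatio ?? 0;
}

// `differingPixels` counts the pixels that already exceeded `threshold`.
function snapshotPasses(
  differingPixels: number,
  width: number,
  height: number,
  opts: DiffOptions,
): boolean {
  return differingPixels <= allowedDiffPixels(width, height, opts);
}

console.log(snapshotPasses(1, 1280, 720, {}));                             // false
console.log(snapshotPasses(20, 1280, 720, {maxDiffPixels: 25}));           // true
console.log(snapshotPasses(30000, 1280, 720, {maxDiffPixelRatio: 0.025})); // false
```

Note that threshold acts earlier in the process: it decides which pixels count as different in the first place, while the other two options decide how many such pixels you are willing to accept.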

Configure forgiveness globally

To configure your snapshot options globally, update your playwright.config.ts:

export default defineConfig({
  expect: {
    toHaveScreenshot: {
      threshold: 0.25,
      maxDiffPixelRatio: 0.025,
      maxDiffPixels: 25,
    },
    toMatchSnapshot: {
      threshold: 0.25,
      maxDiffPixelRatio: 0.025,
      maxDiffPixels: 25,
    },
  },
});

Override forgiveness per-assertion

To override your global snapshot options, update your test file:

test('forgiving snapshots', async ({page}) => {
  await page.goto('https://www.browsercat.com');
  const $hero = page.locator('main > header');

  await expect(page).toHaveScreenshot({
    maxDiffPixelRatio: 0.15,
  });

  await expect($hero).toHaveScreenshot({
    maxDiffPixels: 100,
  });
});

How do I tune these options?

To converge on the correct setting for your app, review the failing “diff” images produced by your tests. In those images, yellow pixels indicate pixels that are within the threshold allowance, while red pixels fail the test.

First try to increase threshold, and see if you can gobble up the red pixels in your snapshots. But be careful: a high threshold will cause false negatives. I wouldn’t go any higher than 0.35, and that’s high.

If threshold doesn’t work, focus on maxDiffPixelRatio and maxDiffPixels. And consider the trade-offs these options entail.

Since maxDiffPixelRatio is relative to image size, it’s more likely to yield good results across a wide range of images. This makes it a good contender for a global setting… but only if you set it conservatively! After all, 10% of a full page image will allow a lot of variation.

On the other hand, since maxDiffPixels is a constant value, it gives you much better control on individual tests. But it’s also much more risky if applied globally, even at a low setting. For small images, a high maxDiffPixels might constitute a huge percentage of the image.
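
A little arithmetic makes the trade-off tangible. The two helpers below are hypothetical names for illustration, and the image sizes are made-up examples rather than measurements from a real app:

```typescript
// Pixels that a ratio-based budget allows for an image of the given size.
function pixelsAllowedByRatio(width: number, height: number, ratio: number): number {
  return Math.floor(width * height * ratio);
}

// Fraction of an image that a fixed pixel budget represents.
function ratioOfFixedBudget(width: number, height: number, maxDiffPixels: number): number {
  return maxDiffPixels / (width * height);
}

// A tall full-page capture with maxDiffPixelRatio: 0.1
console.log(pixelsAllowedByRatio(1280, 4000, 0.1)); // 512000

// A small icon button with maxDiffPixels: 100
console.log(ratioOfFixedBudget(40, 40, 100)); // 0.0625
```

In other words, a 10% ratio on a long page tolerates over half a million changed pixels, while a seemingly modest budget of 100 pixels forgives more than 6% of a 40 by 40 icon.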

Visual testing in CI/CD

Almost there! You’ve got your visual tests running locally, and you’re confident in their results. Now it’s time to get them running in your CI/CD pipeline.

You may be surprised to discover this is no easy task. After all, you need some way to store the snapshots between runs, and you need some way to let your pipeline know when it’s OK to update the snapshot cache.

Thankfully, I took care of all this for you. :)

For this example, I’m going to assume you’re using GitHub Actions. But the process is similar for other CI/CD providers.

Let’s get started!

1. Configure Playwright for CI/CD

Your CI/CD environment is not like your local environment, and we should account for that in our Playwright configuration.

Update your playwright.config.ts with the following options. Feel free to merge these with any other options you’ve set so far:

import {defineConfig, devices} from '@playwright/test';

const isCI = !!process.env.CI;

export default defineConfig({
  timeout: 1000 * 60,
  workers: isCI ? 1 : '50%',
  retries: isCI ? 2 : 0,
  forbidOnly: isCI,

  outputDir: '.test/spec/output',
  snapshotPathTemplate: '.test/spec/snaps/{projectName}/{testFilePath}/{arg}{ext}',
  testMatch: '*.spec.{ts,tsx}',

  reporter: [
    ['html', {
      outputFolder: '.test/spec/results',
      open: 'never',
    }],
    isCI ? ['github'] : ['line'],
  ],
});

Here’s what’s going on:

- The top few options account for the limited resources available in a CI environment. Tests will run slower, and they’ll be a little more flaky, so we need to adapt accordingly.

- The next batch of options organize all of your tests’ outputs into the .test directory. This will make it easy to cache. Add .test/ to your .gitignore file. None of this stuff should be committed to your repo.

- Lastly, we’re going to generate html and github reports in CI/CD. The html report will be useful for debugging, and the github report will be useful for reviewing results with minimal output.

2. Run your tests in CI/CD

Next, let’s create a basic pipeline. It doesn’t do everything (yet), but it’s a solid foundation to work from.

Create a file at .github/workflows/visual-tests.yml:

name: Visual Tests

on:
  push:
    branches:
      - "*"
  pull_request:
    branches:
      - "*"

jobs:
  run-tests:
    runs-on: ubuntu-latest
    timeout-minutes: 15
    steps:
      - name: Checkout
        uses: actions/checkout@v4
      - name: Set up Node
        uses: actions/setup-node@v3
        with:
          # match the package manager used in "Install deps" below
          cache: npm
          node-version-file: .nvmrc
          check-latest: true
      - name: Install deps
        run: |
          npm install
          npx playwright install
      - name: Test
        run: npx playwright test --ignore-snapshots

Notice that we’re running our tests with the --ignore-snapshots flag. Since we have no way to store snapshots between test runs, this is literally the only way to get our tests to pass.

If you push your repo to Github now, this workflow will run, and it will pass, but it won’t guarantee anything about your app.

Let’s remedy that…

3. Store snapshots between runs

For our visual tests to work, we’ll need to store snapshots between runs. So first, let’s cache them using GitHub’s cache action.

Second, let’s also write a step that generates new snapshots if they don’t exist. This will solve the problem of our tests failing the first time they run on a branch.

Third, let’s stop ignoring our snapshot assertions.

Here are the updates:

- name: Install deps
  run: |
    npm install
    npx playwright install
- name: Set up cache
  id: cache
  uses: actions/cache@v4
  with:
    key: cache/${{github.repository}}/${{github.ref}}
    restore-keys: cache/${{github.repository}}/refs/heads/master
    path: .test/**
- name: Initialize snapshots
  if: ${{steps.cache.outputs.cache-hit != 'true'}}
  run: npx playwright test --update-snapshots
- name: Test
  run: npx playwright test

Notice that our cache key includes the current branch as well as a fallback to the master branch. That way a new branch has some material to work from on the first push.
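
The relationship between the primary key, the fallback key, and the cache-hit output can be confusing, so here is a simplified model of the lookup. This is a sketch of my understanding, not the cache action's code: restoreCache is a hypothetical function, the repository name is made up, and the model ignores details such as which of several prefix matches is the newest.

```typescript
// Simplified, hypothetical model of a cache lookup with a fallback key.
type CacheResult = {restoredKey: string | null; cacheHit: boolean};

function restoreCache(storedKeys: string[], key: string, restoreKeys: string[]): CacheResult {
  // An exact match on the primary key is the only case reported as a hit.
  for (const stored of storedKeys) {
    if (stored === key) return {restoredKey: stored, cacheHit: true};
  }
  // Otherwise fall back to the first stored key that starts with a restore key.
  for (const prefix of restoreKeys) {
    for (const stored of storedKeys) {
      if (stored.indexOf(prefix) === 0) return {restoredKey: stored, cacheHit: false};
    }
  }
  return {restoredKey: null, cacheHit: false};
}

const repo = 'acme/webapp'; // hypothetical repository name
const masterKey = `cache/${repo}/refs/heads/master`;
const branchKey = `cache/${repo}/refs/heads/feature-x`;

// First push of a new branch: files are restored from master, but it is not a hit.
console.log(restoreCache([masterKey], branchKey, [masterKey]));
// { restoredKey: 'cache/acme/webapp/refs/heads/master', cacheHit: false }

// Later pushes on the same branch: exact match, so it is a hit.
console.log(restoreCache([masterKey, branchKey], branchKey, [masterKey]));
// { restoredKey: 'cache/acme/webapp/refs/heads/feature-x', cacheHit: true }
```

Under this model, the "Initialize snapshots" step still runs on the first push of a new branch, since only an exact key match sets cache-hit to 'true'. Keep that in mind when deciding how much you trust the very first run on a branch.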

If you trigger the pipeline now, on the first run, you’ll see snapshots generated, the tests pass, and the cache updated. On subsequent runs, you’ll see the cache hit, no new snapshots generated, and the tests still pass.

Not bad, but what happens when the snapshots need to be updated?

4. Trigger a snapshot update

Now that we have visual tests that really work, let’s change our workflow so that we can update snapshots on demand.

First, let’s parameterize our workflow…

on:
  push:
    branches:
      - "*"
  pull_request:
    branches:
      - "*"

  # Allow updating snapshots when called from other workflows
  workflow_call:
    inputs:
      update-snapshots:
        description: "Update snapshots?"
        type: boolean

  # Allow updating snapshots during manual runs
  workflow_dispatch:
    inputs:
      update-snapshots:
        description: "Update snapshots?"
        type: boolean

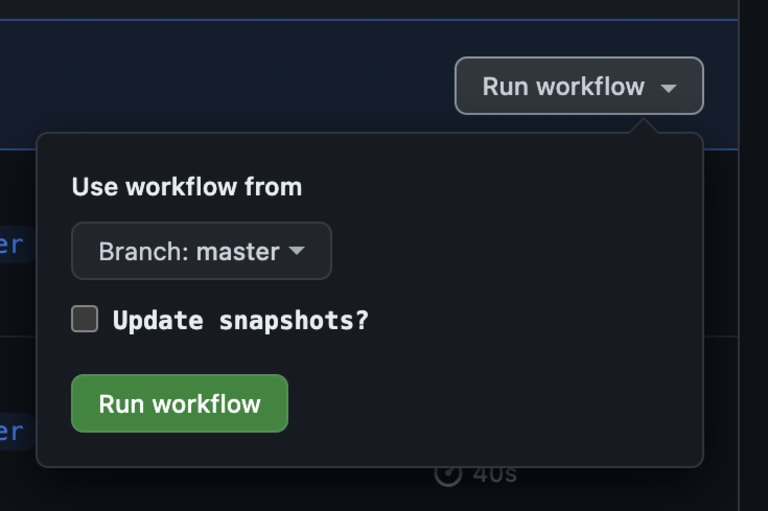

workflow_dispatch allows us to manually trigger the workflow with or without updating snapshots. workflow_call gives other GitHub workflows the same set of options.

Here’s what the manual widget looks like:

Let’s also update “Initialize snapshots” to trigger an update when this parameter is enabled:

- name: Initialize snapshots
  # the input is a boolean, so test it directly rather than comparing it to the string 'true'
  if: ${{steps.cache.outputs.cache-hit != 'true' || inputs.update-snapshots}}
  run: npx playwright test --update-snapshots --reporter html

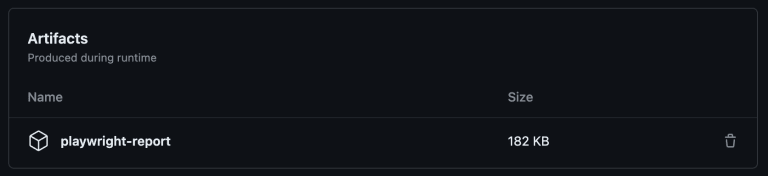

Lastly, let’s upload our HTML report as an artifact, so that we can reference it before deciding if we should ask the workflow to update snapshots… or if we need to debug the problem:

- name: Test
  continue-on-error: true
  run: npx playwright test
- name: Upload test report
  if: always()
  uses: actions/upload-artifact@v4
  with:
    name: playwright-report
    path: .test/spec/results/
    retention-days: 30
    overwrite: true

Notice that our “Test” step now enables continue-on-error, so that we can upload our report even if the tests fail.

The new “Upload test report” step uploads the report as an artifact. In the workflow results, you’ll see a direct link to a zip file containing our report.

5. Speed up your CI/CD pipeline… a lot!

Things are going great, but there’s one more thing we can do to make visual tests even better: make them blazingly fast!

You’ve probably noticed two steps are slowing your pipeline down:

- Installing Playwright browsers.

- Running tests serially.

Thankfully, we can fix both of these issues, and most likely for free!

BrowserCat hosts a fleet of Playwright browsers in the cloud that you can connect to with just a few lines of code.

If you do so, you won’t have to install browsers in your CI/CD environment, and you’ll be able to run your tests entirely in parallel.

And the best part? BrowserCat has an awesome forever-free plan. Unless you’re running a huge team, you’ll probably never have to pay a dime.

So let’s get started!

First, sign up for a free account at BrowserCat.

Second, create an API key and store it as a secret named BROWSERCAT_API_KEY in your Github repository. You can do this by navigating to your repository, clicking “Settings,” then “Secrets,” then “Actions,” then “New repository secret.”

Third, let’s update playwright.config.ts to use BrowserCat:

import {defineConfig} from '@playwright/test';

const isCI = !!process.env.CI;
const useBC = !!process.env.BROWSERCAT_API_KEY;

export default defineConfig({
  timeout: 1000 * 60,
  workers: useBC ? 10 : isCI ? 1 : '50%',
  retries: useBC || isCI ? 2 : 0,
  maxFailures: useBC && !isCI ? 0 : 3,
  forbidOnly: isCI,

  use: {
    // connect to BrowserCat only when an API key is available
    connectOptions: useBC ? {
      wsEndpoint: 'wss://api.browsercat.com/connect',
      headers: {'Api-Key': process.env.BROWSERCAT_API_KEY!},
    } : undefined,
  },
});

In the updates above, you’ll notice we’ve increased parallelization to 10 workers when using BrowserCat. This is a good starting point, but you can increase it significantly more without issue.

Notice that this config connects to BrowserCat whenever BROWSERCAT_API_KEY is defined. This is useful for running tests locally, as well as in CI/CD.
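
Nested ternaries are compact but hard to read at a glance. As a sanity check, the sketch below restates the same conditions as a plain function so the outcome for each environment can be read off directly. The runnerSettings function and its RunnerSettings type are hypothetical and exist only for illustration. The real values live in the config above.

```typescript
type RunnerSettings = {workers: number | string; retries: number; maxFailures: number};

// Mirrors the ternaries from the config as a plain, testable function.
function runnerSettings(env: {CI?: string; BROWSERCAT_API_KEY?: string}): RunnerSettings {
  const isCI = !!env.CI;
  const useBC = !!env.BROWSERCAT_API_KEY;
  return {
    workers: useBC ? 10 : isCI ? 1 : '50%',
    retries: useBC || isCI ? 2 : 0,
    maxFailures: useBC && !isCI ? 0 : 3,
  };
}

// Local machine, no API key
console.log(runnerSettings({}));
// { workers: '50%', retries: 0, maxFailures: 3 }

// CI without BrowserCat
console.log(runnerSettings({CI: 'true'}));
// { workers: 1, retries: 2, maxFailures: 3 }

// CI with BrowserCat ('key' is a placeholder, not a real credential)
console.log(runnerSettings({CI: 'true', BROWSERCAT_API_KEY: 'key'}));
// { workers: 10, retries: 2, maxFailures: 3 }
```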

OK, now here’s the last bit of magic… Let’s stop installing Playwright’s browsers every time we run our pipeline:

- name: Install deps
  run: npm install

If you run your pipeline now, you’ll witness a dramatic speed-up, even with just a single test in your suite. And with 1000 free credits per month, you can run your pipeline for hours on end with plenty of time left over.

Next steps…

Whew! You’ve come a long way.

At this point, the only thing you can do to improve your visual testing skills is to practice them. So go forth and snapshot!

Here are some great links to help you on your way:

- Playwright Visual comparisons

- expect(page).toHaveScreenshot()

- expect(locator).toHaveScreenshot()

- expect(image).toMatchSnapshot()

- TestConfig.expect

- BrowserCat Blog

Happy automating!
